In case you hadn’t noticed, there has been lots of press in the past week about the rumor that Google is working on a pair of HUD glasses. I don’t doubt it, but having asked several Googlers about this at CES, I invariably got a sarcastic reply along the lines of “yup, and we’ve also got a space elevator coming out later this year.” But I’ve never spoken to somebody from Google X. I did hear an account of Sergey Brin spending a nice chunk of time at the Vuzix booth at the show, so HUD glasses are clearly on their radar, if nothing else.

One should note that there has been mention of image analysis being performed using cloud resources in Google’s scenario. This is part of the scenario that I envisioned after hearing Microsoft’s Blaise Aguera y Arcas introduce Read/Write World at ARE. While I haven’t heard anything about it since, I wouldn’t be surprised if it pops back up this year. What I think will happen is that a wearable system will periodically upload an image to a server that will use existing photographic resources to generate a precise homography matrix pinning down the location of the camera at the time that the image was taken. The GPS metadata attached to the image will provide the coarse location fix necessary to select a relatively small dataset against which to compare the image. Moment-to-moment tracking will be done using a hybrid vision and sensor-based solution. But at least in the first generation of such systems, and in environments that don’t provide a reference marker, I expect cloud-based analysis to be part of generating the ground truth against which they track.
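To make the coarse-fix step concrete, here is a minimal Python sketch of how a server might narrow its reference set using an image’s GPS metadata before any image matching happens. The `ReferenceImage` record, the `candidate_set` function, and the 100 m default radius are hypothetical choices for illustration, not anything Google or Microsoft has described; the distance calculation is the standard haversine great-circle formula.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class ReferenceImage:
    """Hypothetical record for a previously captured, geotagged photo."""
    image_id: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def candidate_set(gps_lat, gps_lon, references, radius_m=100.0):
    """Return reference images within radius_m of the GPS fix, nearest first.

    Only this small subset would then be handed to the (expensive) image matcher.
    """
    scored = [(haversine_m(gps_lat, gps_lon, r.lat, r.lon), r) for r in references]
    scored.sort(key=lambda pair: pair[0])
    return [r for dist, r in scored if dist <= radius_m]
```

A real system would use a spatial index rather than scanning every reference, but the idea is the same: GPS gets you into the right neighborhood, and vision does the rest.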

Let’s do a little recap of some of the most notable HUD glasses options these days:

Vuzix STAR1200 – I got to try these out at ARE back in June of last year and was quite impressed. I’ve since picked up a pair and love them, with some caveats. Because they use backlit LCD microdisplays as opposed to an emissive technology like OLEDs, you don’t get perfect transparency in areas where the signal source is sending black. That means that if the glasses are on and you are sending them a blank black screen, you still see a slight difference between the display area and your peripheral vision. Also, the field of view (FOV) of the display area could definitely stand to be a little larger. The STAR1200 is intended primarily as a research and development device, and is priced accordingly at $5000. The device comes with a plethora of connectors for different types of video sources, including mobile devices such as the iPhone. The STAR1200 is the only pair of HUD glasses that I know of that comes with a video camera. The HD camera that it originally shipped with was a bit bulky, but Vuzix just started shipping units that include a second, much smaller camera that can be swapped in. The glasses also ship with an inertial orientation tracking module. Vuzix recently licensed Nokia’s near-eye optics portfolio and will be using their holographic waveguide technology in upcoming products priced for the consumer market.

Lumus Optical DK-32 – I finally got to try out a Lumus product at CES, and was quite impressed. I’ve spoken with people who have tried them in the past and, based on my experience, it looks like they’ve made some advances. The FOV was considerably wider than that of the Vuzix glasses, and both contrast and brightness seemed to be marginally superior. That said, you as an individual can’t buy a display glasses product from Lumus today, and they are very selective about whom they’ll sell R&D models to. You can’t buy the glasses unless you’re an established consumer electronics OEM, and even if you could get Lumus to agree to sell you a pair, it would set you back $15k. I’ve heard that part of the issue is the complexity of their optics manufacturing process. As I was several years ago, I’m still looking forward to seeing a manufacturer turn the Lumus tech into a consumer product.

Seiko Epson Moverio BT-100 – I’m rather ashamed that I didn’t know about these before heading to CES, and so didn’t get to hunt them down and try them. I love that they come with a host device running Android. I can’t, however, find mention of any sort of video input jack. It’s a shame if they have artificially limited the potential of these ¥59,980 ($772) display glasses. Also, with a frame that size, I’m genuinely surprised that they didn’t pack a camera in there. I’m looking forward to getting a chance to try them out.

Brother Airscouter – Announced back in 2008, Brother’s Airscouter device has found its way into an NEC wearable computer package intended for industrial applications.

I don’t mean to come off as a fanboy, but I like Vuzix a lot. This is primarily because they manage to get head-mounted displays and heads-up displays into the hands of customers despite the fact that this has consistently been a niche market. I have to admire that kind of dedication to pushing for the future that we were promised. I also love that they are addressing the needs of augmented reality researchers specifically. It will be interesting to see how these rumors about Google will affect the companies that have been pushing this technology forward for such a long time. I’m hoping that it will help broaden and legitimize the entire market for display glasses, which have long been on the receiving end of trivializing jokes on the tech blogs and their comment threads.

The Strong, Weak, Open, and Transparent

So, it’s been a couple of years since I’ve felt compelled to post to this blog, but I think it’s high time for an update. I’m just going to quickly touch on a few things I’m excited about, having just attended Augmented Reality Event 2011.

Things in the Augmented Reality world have progressed rapidly, if not as rapidly as I might once have imagined they would. In one of my first posts, I closed with an idea about streaming one’s first-person POV to a giant Microsoft Photosynth system in the cloud. The Bing Maps team, under Blaise Aguera y Arcas and Avi Bar-Zeev, is doing exactly that. With Read / Write World, Microsoft is developing what I think will be the foundation of what Blaise called “Strong AR.” This is in contrast with the “weak,” strictly sensor-based AR applications that we’re seeing on mobile devices at the moment.

To clarify, there are two paradigms of current AR usage:

One of these two is local vision-based AR, which uses marker or texture tracking to position virtual objects relative to a camera’s perspective. This is done by calculating the homography that describes the relationship between the captured image of the tracked pattern and the original pattern. From this, together with the camera’s intrinsic parameters, one generates the translation and orientation matrices used to place virtual content in the scene. This is Strong AR, but on a local scale and without a connection to a coordinate system linked to the world as a whole.
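For the curious, here is a rough Python/NumPy sketch of that pipeline under some simplifying assumptions: the marker is planar and lies at Z = 0 in its own frame, the camera intrinsics `K` are known, and at least four clean point correspondences are available. `homography_dlt` estimates the homography with the textbook Direct Linear Transform, and `pose_from_homography` factors it as H ~ K [r1 r2 t] to recover rotation and translation. Both function names are mine, and real trackers add coordinate normalization, outlier rejection such as RANSAC, and nonlinear refinement, none of which is shown here.

```python
import numpy as np


def homography_dlt(src, dst):
    """Estimate a 3x3 homography H with dst ~ H @ src from >= 4 point pairs (DLT)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the nine entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def pose_from_homography(H, K):
    """Rotation R and translation t of a planar marker (Z = 0), using H ~ K [r1 r2 t]."""
    M = np.linalg.inv(K) @ H
    scale = 1.0 / np.linalg.norm(M[:, 0])  # r1 must be a unit vector
    if M[2, 2] * scale < 0:                # keep the marker in front of the camera (t_z > 0)
        scale = -scale
    r1, r2, t = scale * M[:, 0], scale * M[:, 1], scale * M[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)            # snap to the nearest orthonormal matrix
    return U @ Vt, t
```

With noisy real-world correspondences, r1 and r2 won’t come out exactly orthonormal, which is why the last step projects R onto the nearest rotation.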

The other is the AR found in most mobile apps like Layar and Wikitude. The information visualized through these apps is placed using a combination of geolocation and orientation derived from the sensors found in smartphones. These sensors are the components of a MARG array: triaxial magnetometric, accelerometric, and gyroscopic sensors. By knowing a user’s position and orientation, which are together referred to as a user’s pose, one nominally knows what a user is looking at, and inserts content into the scene. The problem with this method is one of resolution and accuracy, and this is what Blaise was referring to as “weak.” This method, however, provides an easy means by which to place data out in the broader world, if not with precise registration.
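Here is a minimal sketch of that “weak” placement step in Python. It assumes a GPS fix, a compass heading in degrees clockwise from true north, and a simple pinhole camera with a 60° horizontal FOV and a 640-pixel-wide image (both placeholder numbers, not taken from any particular phone or app). It computes the bearing to a point of interest and maps the angular offset from the heading onto a screen column. Any error in the heading or position shifts the label sideways, which is exactly the registration problem described above.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlam = math.radians(lon2 - lon1)
    x = math.sin(dlam) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlam)
    return math.degrees(math.atan2(x, y)) % 360.0


def screen_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
             hfov_deg=60.0, width_px=640):
    """Horizontal pixel column for a POI, or None if it lies outside the horizontal FOV."""
    bearing = initial_bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    # Signed angle from where the device points to where the POI lies, in [-180, 180).
    offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    half = hfov_deg / 2.0
    if abs(offset) > half:
        return None
    # Pinhole projection: map the angular offset onto the image plane.
    focal_px = (width_px / 2.0) / math.tan(math.radians(half))
    return width_px / 2.0 + focal_px * math.tan(math.radians(offset))
```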

The future of Strong AR is the fusion of these two paradigms, and this is what Read / Write World is being developed for. The underlying language of the system is called RML, or Reality Markup Language. Already, if photographic data for a location exists in the system, and one uploads a new image with metadata placing it nearby, Read / Write World can return the homography matrix. According to Blaise’s statements during his Augmented Reality Event keynote, pose relative to the existing media is determined with accuracy down to the centimeter. And the new image becomes part of the database, so users will constantly be refining and updating the system’s knowledge of the world.

Anyhow, I think Read / Write World has the potential to be the foundation for everything that I, and so many others, have envisioned. That’s on the infrastructure side.

So what about the hardware?

In the last couple of years, mobile devices have really grown up, and are getting to, or have reached, the point where they pack enough processing power to be the core of a real Strong AR system. Qualcomm has positioned itself as one of the most important entities in Augmented Reality, providing an AR SDK optimized for their hardware, on which most Android and Windows Mobile platforms are based. In a surprising move, at ARE, they announced that they are bringing their AR SDK to the iOS platform as well.

With peripheral sensor support and video output, we’ve got almost everything we need to be able to connect a pair of see-through display glasses (more on those in a little bit) to one of these mobile devices for an AR experience. But the best that those connections can provide is a “weak” AR experience. Why? Because the connectors don’t support external cameras. True, there are devices like the Looxcie, but their resolution and framerate are paltry, a limitation of the Bluetooth connection. On top of that, the integrated cameras in mobile devices are wired at a low level to the graphics cores of their processors and dump the video feed directly into the framebuffers, facilitating the use of optimized processing methods, such as Qualcomm’s. What we need is the inclusion of digital video input in the device connectors, providing the same sort of low-level access to the video subsystems of the devices. This is absolutely vital to being able to use visual information from the camera(s) on a pair of glasses for their intended purpose of real-time pose estimation.

So… glasses…

At ARE I got to try out a Vuzix prototype that finally delivers what I’d hoped to see with the AV920 Wrap. The new device is called the STAR 1200, for See-Through Augmented Reality. It looks a little funny in the picture, but don’t worry about the frame. The optical engine is removable and the final unit’s frame will probably look substantially different. It provides stereo 852×480 displays projected into optically see-through lenses and, let me tell you, it looks good. It is a great first step towards something suitable for mass adoption. The limited field of view means that it won’t provide a truly immersive experience for gaming and the like, but again, it is a great first step. Now, before I get your hopes up, this device will be priced for the professional and research markets, like the Wrap 920AR. Vuzix isn’t a big enough company to bust this market open on its own. But once apps are developed and the market grows, we’ll see this technology reaching consumer-accessible price points. I’m going to refrain from predicting a timeframe this time around, but I think that things are very much on track. Also, keep in mind that this is a different technology from the Raptyr, the prototype that Vuzix showed at CES this year. The Raptyr’s displays use holographic waveguides, while the STAR 1200 is built around more traditional optics. I did get to see another Vuzix prototype technology in private, and can’t say anything about it, but it is very promising.

One last development that has me very excited is Google’s new Android Open Accessory Development Kit. It’s based on the Arduino platform, making it instantly accessible to hundreds of thousands, if not millions, of existing experimenters, developers, and hardware hackers, including myself. This opens up all kinds of possibilities for custom human interface devices.

Okay. That’s it for today, but I’ll write again soon. I promise.

ISMAR… Where to start…

First, thank you to the awesome people, especially Sean White of Columbia University, who helped make it possible for me to be there.

Right now I’m just going to give you the beginning of my takeaway.

The paper that resonated most with my basic desire to see the big platform problems handled first was “Global Pose Estimation using Multi-Sensor Fusion for Outdoor Augmented Reality” by Gerhard Schall, Daniel Wagner, Gerhard Reitmayr, Elise Taichmann, Manfred Wieser, Dieter Schmalstieg, and Bernhard Hofmann-Wellenhof, all out of TU Graz, Austria, with the exception of Mr. Reitmayr, who is at Oxford. This is the kind of fusion work that I’ve been talking about since my first post, and it was really exciting to see people actually doing it seriously on the hardware side. The two XSens MTi OEM boards headed to the new lab for a non-AR project should have cleared customs by now. I’ll find out if they’re there on Tuesday. 🙂 I only mention it because it’s more or less the same device that was used for the inertial portion of this project, and I can’t wait to build them into something.
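The paper’s own fusion approach is far more involved than anything I’d sketch here, so take this only as an illustration of the basic idea and not as their method: a complementary filter for heading, written in Python. It assumes a gyro reporting yaw rate in degrees per second and a magnetometer-derived heading in degrees; the function names and `alpha = 0.98` are arbitrary placeholders. The gyro handles fast motion but drifts, and the magnetometer, noisy but drift-free, pulls the estimate back slowly.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def fuse_heading(heading_deg, gyro_rate_dps, mag_heading_deg, dt_s, alpha=0.98):
    """One complementary-filter step for yaw (heading), returned in [0, 360).

    The gyro term integrates angular rate (fast, but drifts); a small weight
    (1 - alpha) nudges the result toward the magnetometer heading (slow, no drift).
    """
    predicted = heading_deg + gyro_rate_dps * dt_s   # integrate the gyro
    error = wrap_deg(mag_heading_deg - predicted)    # shortest way around the circle
    return (predicted + (1.0 - alpha) * error) % 360.0
```

With a constant gyro bias, this filter settles at a fixed offset of roughly bias · dt · alpha / (1 - alpha) from the magnetometer heading instead of drifting without bound.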

I also loved reading Mark Livingston’s paper on stereoscopy.

Incidentally, all of the papers, and video of all the sessions, should be getting posted soon to ISMARSociety.org. Serious props to the student volunteers who appeared to really keep things running smoothly, and who performed the awesome task of capturing all of the content on video. This, the first year of AR as a popular buzzword, is the time to share with the rest of the world just how much scientific effort is going into making real progress.

I’ve got lots to say about the HMDs, including Nokia Research Center’s cool eye-tracking see-through display sunglasses prototype, but I’m going to save it for tomorrow, or perhaps for another forum. For the moment, just enjoy this photograph of Dr. Feiner stylishly rockin’ the Nokia prototype.

Hell yeah, dude.

Stars of the AR Blogosphere

Though we were still notably lacking Tish Shute and Rouli, this pic has a pretty stacked roster of AR blogosphere heavy-hitters in it. And speaking of Tish, I think she may be onto something with the AR Wave initiative. The diagram in her most recent post makes a great deal of sense.

And sorry to flake on the daily updates. I did end up demoing some glove stuff, and I was just generally pretty wiped out by the time I got back to my hotel each evening. ISMAR was terribly exciting for me, and I have a ton more to recount.

ISMAR

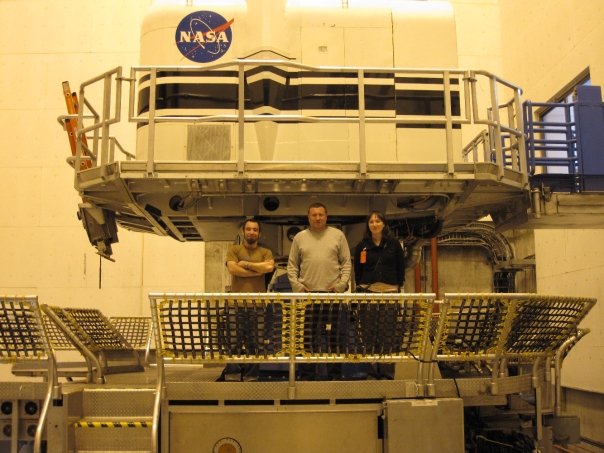

After having my cab get rear-ended on the way to JFK, and sitting on the runway for half an hour in a plane full of crying and whining kids, I’m finally in the air on the way to Orlando for ISMAR. Unfortunately it is sans a mature demo. I wasn’t able to get a built set of my hardware sent to Seac02 in time for them to integrate it. Actually, it’s because I got a bit distracted by my new job, for which I was out at Ames the week before last, assisting with a set of tests in the Vertical Motion Simulator. I know ISMAR is a big deal, but one doesn’t get many chances to play with that kind of hardware.

Anyhow, I tried to use my free time to work on the project, but things just didn’t really come together without being in my lab at home.

So I’m off to ISMAR without my project in the shape that I’d intended, but I’m actually thinking that that’s just as well. What I do have is a press badge, and rather than trying to impress the guys with the big brains with my little DIY VR project, I’m going to try to learn and see as much as I can this week, and blog about it every chance I get.

As always, if you want to hear the latest and greatest news in the field, head over to Ori Inbar’s blog at www.gamesalfresco.com. The big news right now is that the private API code for accessing the iPhone camera frame buffer is now being freely distributed, and Ori and company are the ones giving it out!

Starting tomorrow, you might want to begin checking back here daily to find out what I’m seeing at ISMAR, and what I make of it all. And if you’re at the conference, drop me an email if you’re interested in meeting up or have something you want me to see.

And if you want to try your code on an ION-powered netbook, I’ll be driving my VR920 and CamAR with my shiny new HP Mini 311 😉

A Conversation With Paul Travers of Vuzix

I just had a very interesting conversation with Paul Travers, CEO of Vuzix.

Paul explained several things to me, including that it was a mistake on their part to keep a name so similar to the AV920 Wrap when creating the Wrap 920. The Wrap product line is distinct from those previously shown, including the AV920 Wrap. There is no denying that the pictures of the Wrap series now posted on the Vuzix website do suggest that, when it is released, it will be far and away the most attractive looking “video eyewear” device to be brought to market. Paul also confirmed that there will be a stereo camera pair, as well as other accessories, for the Wrap series devices. I’ve seen a picture, and I don’t think people will be disappointed with the approach that they’ve taken for attaching cameras to the device.

The most important part of the conversation was that in which Paul assured me that Vuzix will not be abandoning the optically transparent see-through display market, and that we have a great deal to look forward to. He reaffirmed their commitment to the Augmented Reality market, and told me that he was confident that their products would continue to be well ahead of the curve and offer features unheard of at their price point. Confirming what I’d heard from people like Joe Ludwig, Robert Rice and Ori Inbar, he told me that the AV920 Wrap was an imperfect device, and that he thought it better not to release a product that didn’t meet his company’s own high standards, rather than releasing something which he thinks would have let people down. He reiterated that it had been a mistake to keep a name so similar to that of the AV920 Wrap, said that he regretted having left the AV920 Wrap up on their website for as long as they did after having decided not to release it, and also admitted that there should have been more clarification when the Wrap line was re-envisioned as it was. Yes, it would’ve been nice to have been told.

Having had this reassuring conversation with Paul, I can tell you that I still expect to see great things come out of Vuzix (if anything, my expectations are now higher). Though I’m still quite disappointed by having to continue waiting for a true consumer-oriented see-through HMD, and though I do feel a little led on, I expect that when we do see one from Vuzix, it will far exceed the expectations initially set by the AV920 Wrap prototype shown at the last CES. More than anything, I was reassured by the frankness with which Paul admitted the unintentional mistakes that had been made in handling the separation of the Wrap line from their ongoing optically transparent display research and development. They’ve been at this for a long time, and I’m convinced that Vuzix would never squander their hard-earned credibility by deliberately deceiving their customers.

See-Through HMD for Consumers Further Off Than Expected

Note: When you’re done reading this, please see my followup post.

Today we have received confirmation from Vuzix CEO Paul Travers that the highly anticipated Vuzix Wrap 920, previously known as the AV920 Wrap, will not, in fact, be a see-through head-mounted display (HMD). It will instead be a “see-around” model. This means that the LCD viewing elements will be opaque, as in previous models, but will be suspended behind a sunglasses-style lens without obstructing the peripheral view around the display. In previous HMD devices this wasn’t generally the case because one doesn’t view the LCD panel and light source directly as one does a typical computer or television monitor. Put simply, an HMD requires focal optics so that your eyes can focus on something so close without giving you a headache.

(See this previous post where I reported on being told by Robert Rice, and then Vuzix, that the AV920 Wrap would, in fact, be a true optical see-through HMD.)

Presumably Vuzix will still be offering a stereo pair camera accessory for the Wrap 920, as was supposed to be produced for the original AV920 Wrap, but it’s hard to know what to expect at this point.

So while this does represent an incremental step forward in Vuzix’s offerings, it isn’t the one we were promised. More importantly, it isn’t the one we’ve all been waiting for.

I am, of course, disappointed by this news. After Lumus Optical went back to the drawing board, as they told Ori Inbar they had done in this interview on his blog, Vuzix was the only company still promising a see-through head-mounted display for consumers any time soon.

Now? Well, we’re left waiting for:

- Somebody to get serious and invest some real VC money in Lumus

- Sony to produce something using their holographic waveguide technology

- Konica Minolta to further develop their Holographic Optical Element technology

- Microvision to show that they’re serious by showing something other than a Photoshopped concept illustration (Microvision has been subcontracted to develop a new see-through HMD for the military under the ULTRA-Vis program, but who knows when that might lead to development of a civilian device)

- Or something unexpected to show up.

I had been hoping to be able to use a see-through HMD in the ISMAR demo presentation on which I’m working with Seac02 using their awesome LinceoVR software. It looks like we’ll have to make do with the conventional HMDs already at our disposal.

Maybe we’ll still see released products using Vuzix’s touted “Quantum Optics” before we get our quantum computers.

AR Consortium, ARML Spec, Layar 3D

Lots of big AR news these days. Where to start?

Well, there are two big ones today so far:

Robert Rice and Mobilizy are proposing an ARML Specification for mobile AR browsers to the newly formed AR Consortium. The Consortium, with its distinguished list of members, is big news in and of itself. I really, truly hope that Layar chooses to get on board with this. Since Layar is the other widely recognized player in the mobile AR Browser game so far, I fear it may have the power to make or break this standard. Between the endorsement of Rice (and so, presumably, Neogence), and adoption by Layar and Mobilizy (maker of Wikitude), we could have a real functional standard. If, on the other hand, Layar fails to adopt the spec, it could go the way of VRML unless new competitive players arrive quickly and with support.

And today, Layar announced the upcoming addition of support for dynamic 3D models embedded in their content layers.

If the ARML Spec is made versatile enough to support Layar’s 3D strategy, we could see a real revolution in AR standardization, interoperability, etc. This all goes back to Tish Shute’s fantastic interview with Robert Rice on UGOTrade back in January. Interoperability, standardization, and shared content are the keys here.

It’ll also be interesting to see if Total Immersion and Int13’s upcoming mobile framework will support ARML. Depending on what they produce, that could establish the standard even without adoption by Layar.

Also, as Sergey Ten was quick to point out to me on Twitter, “ARML should include geometry/models and points descriptors/patches so that locations could be recognized by camera.” Given Layar’s 3D announcement, this would be key to their ability to get on board. (Come to think of it, Layar’s announcement may have been prompted by the prospect of Total Immersion and Int13’s entry into the mobile AR Browser fray and what they would bring to it… but that’s tangential and speculative, so I’ll let that notion sit.)

Also, I hear that Mr. Rice’s Neogence has licensed a certain very impressive markerless tracking algorithm. If this is, in fact, the case, then I’m sure he wouldn’t be opposed to the inclusion of optical data-point sets that could be downloaded, based on proximity, and used to register with views of the real world. I myself have been toying with (conceptually only, mind you) the idea of using Google Earth 3D model textures and StreetView imagery as tiles, generated and retrieved based on GPS proximity and heading, to produce more accurate registration. The plausibility of this approach was only reinforced in my head after watching this sweet piece of work by Lee Felaraca today. (See addendum at bottom of post.)
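Purely as a thought experiment, here is how tile selection by position and heading might look in Python. It assumes the common Web Mercator “slippy map” tiling scheme used by many web maps; I’m not claiming that’s how Google Earth textures or StreetView imagery are actually organized or exposed. `tiles_to_fetch` is a hypothetical helper that returns the user’s current tile plus the neighboring tile in the direction they’re facing, so the matching data could be prefetched before it’s needed.

```python
import math


def tile_xy(lat_deg, lon_deg, zoom):
    """Web Mercator tile indices containing a lat/lon (lon in [-180, 180)) at a zoom level."""
    n = 2 ** zoom
    lat = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat)) / math.pi) / 2.0 * n)
    return x, y


def tiles_to_fetch(lat_deg, lon_deg, heading_deg, zoom):
    """Current tile plus the neighbor the user is facing (heading in degrees from north)."""
    n = 2 ** zoom
    x, y = tile_xy(lat_deg, lon_deg, zoom)
    h = math.radians(heading_deg)
    dx = round(math.sin(h))   # facing east -> next column (+x)
    dy = -round(math.cos(h))  # facing north -> previous row (rows grow southward)
    # Wrap around in longitude; clamp at the top and bottom rows of the grid.
    ahead = ((x + dx) % n, min(max(y + dy, 0), n - 1))
    return [(x, y), ahead]
```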

Keep the augmentation coming folks! I can’t wait to see you all at ISMAR!

I’ll leave you with this, in case you haven’t seen it yet:

Addendum:

The reason, incidentally, that I was encouraged by Mr. Felaraca’s work is that a similar technique might be used for generating trackable textures from camera input. Upon revisitation, I’m not exactly sure how that would aid the process of pinpoint registration. My thought is to generate the tiles from previously gathered data and match that against the camera input, as with previously implemented tracking methods. Regardless, the Texture Extraction Experiment is awesome, and would provide an excellent tool for gathering the data used for said tile generation, as well as on-the-fly creation of virtual objects for use in augmented environments.

Augmented Reality Roundup (some of the exciting stuff from the last few months)

Okay. Time for a long overdue update.

First off: a teensy bit of self promotion. I know the demo is still VR, not AR, but give me a little more time on that. In the meantime, here’s another vid of me and my glove, this time at Notacon (to which the always awesome Matt Joyce dragged me as an auxiliary driver of Nick Farr‘s car), where Jeri Ellsworth and George Sanger of the Fat Man and Circuit Girl Show (some of the sweetest, nicest people ever!) awesomely invited me up on stage to show it off, which I then bumblingly did 😀 This is from a couple of months ago, so the software is as it was in the first video. It’s a little long (I told you it was a bumbling demo! cut me some slack!) so you may want to read the rest of the post first, before the overwhelming lethargy has set in 😉

The software is actually coming along, though it’s still self-contained. There are a few cool new features that’ll be ready to show soon.

So, now I’d like to do a little recap of some of the many interesting developments on the AR scene since my last post. So that’s what I’ll do 😉

I’ll follow this post later (when? I have no idea. don’t hold your breath or plan your week around it. =P) with one exploring the implications of the makers of Wikitude and Layar opening up their APIs and looking for user-generated content. It’s a big deal. In the meantime, read this article in the NY Times… but first read the rest of my post. 😀

Also, I haven’t posted since the introduction of the iPhone 3GS, which, as I think everyone already knows, contains a compass that will enable optically referenceless AR apps (with the registration accuracy issues that that entails) on the iPhone. Here’s something you may not have seen yet: Acrossair has used the engine from their Nearest Tube app to write a Nearest Subway app for NYC.

Wikitude, Layar, and Zagat’s NRu apps are all coming to the iPhone 3GS. What can’t yet come to the iPhone, however, is full-speed optical AR. This is because Apple still hasn’t released a public API for direct access to the video feed from the iPhone’s camera. Ori Inbar, creator of the Games Alfresco blog (the definitive AR blog, in my opinion and in those of many others), has written a beseeching Open Letter to Apple, gathering the signatures of almost all of the major players in the field.

So… some of the most exciting demos I’ve seen in the past few months, from a technical perspective, are…

Georg Klein‘s iPhone 3G port of his PTAM algorithm:

My understanding of his previous demos was that they utilized a stereo pair. Seeing this kind of markerless environment-tracking on a single-camera device, and a mobile one at that, is extremely exciting.

The second one which REALLY got me psyched was Haque Design+Research‘s Pachube + Arduino + AR demo (they are the initiators and principal developers of Pachube).

I’ve seen a number of incredibly cool things done with Pachube, starting with Bill Ward’s Real-world control panel for Second Life. Anyhow, my mind is pretty well blown by the fusion with AR. It’s also worth checking out the Sketchup/Pachube integration video in Haque’s YouTube channel.

And the third would have to be (drumroll, please)…

Eminem?!?!

Yup. This neat bit of AR marketing for Eminem’s new album, Relapse, sets itself apart from other, more “run-of-the-mill” FLARToolkit marketing (run-of-the-mill AR? in what reality am I living?! uhm… yeah… I know… an increasingly augmented one =P), by featuring creative user interaction. This is, so far as I know, the first AR app which allows one to paint a texture onto a marker-placed model.

The fourth is equally surprising, is one of the coolest things I’ve ever seen (specifically because it is useful, and not particularly trying to be cool), and is from the United States Postal Service.

The USPS Priority Mail Virtual Box Simulator (Would one simulate a virtual box? I think one simulates a box, or generates a virtual box… why would you simulate something that’s already virtual? =P) is by far the most practical FLARToolkit consumer-facing AR app I’ve seen to date. Kudos to those involved.

Next up: Aaron Meyers and Jeff Crouse, both heavily involved in the OpenFrameworks community and the interactive art world in general, created a very cool game which they call The World Series of ‘Tubing. (Presumably in a nod to the World Series of Poker. Aaron and company were wearing card dealer’s visors when I saw the project demoed at Eyebeam’s MIXER party last month.) Here’s a RocketBoom interview with them.

Marco Tempest‘s AR card trick is superb, and just plain awesome. He’s also just a really nice guy.

Marco’s project was worked on by Zach Lieberman and Theo Watson, the creators and curators of the OpenFrameworks project.

I was lucky enough to see Marco perform his trick live at the OpenFrameworks Knitting Circle, held at Parsons. Aaron Meyers also demoed his kickass work on the World Series of ‘Tubing engine. I haven’t watched the Rocketboom interview yet, but the coolest feature of the ‘Tubing game, the ability to shuttle back and forth through the video clips by rotating the markers, wasn’t actually shared with the participants in the presented incarnation. I actually tried to use it to get an edge when I played, but ended up botching it. It turned out that the player on either side had to tilt their cards towards the other player to accelerate playback (so the directions are reversed, and I would have had to, unintuitively, tip the card to the left to accelerate my clips, as I was on the right side of the stage).

Also awesome and of note is the Georgia Tech/SCAD collaboration, ARhrrrr.

I can’t really argue with blowing up zombies with Skittles. I’d love to see something like this implemented using PTAM, so that you could play on any surface with trackable features. I also have some gameplay/conceptual quibbles with ARhrrrr, but it is a technically very impressive piece of work.

Those are the ones that I checked out and in which I saw something I thought to be fundamentally new or to constitute a breakthrough in technology or implementation. Here are some other projects since I last wrote:

There have been animated AR posters for the new Star Trek movie and the upcoming movie Gamer, Blink-182 videos stuck on Doritos bags, etc. Meh. Passive playback crap. I guess it brings something new to the table for somebody who doesn’t know how to rotate a 3D model with a mouse, but I’d rather watch a music video on an iPod than on a simulated screen on a bag on a screen in front of which I’m holding the bag. Dumb. It kinda’ reminds me of the Aqua Teen Hunger Force episode where Meatwad wins tickets to the Super Bowl, and a holographic anthropomorphic corn chip in a sombrero serenades him with this news… except you need to hold it up to your webcam. Whatevs.

Anyhow, hop over to Games Alfresco for comprehensive coverage of the AR field.

Finally, I’ll leave you with TAT. Don’t watch this video if you don’t want to have to pick your jaw up off of the floor. Enjoy 🙂

First public vid of one of my gloves

There are lots of exciting things going on with marker-based AR right now. I’ll get back to covering them soon, after I’ve worked out a few kinks in my own development plan 🙂

In the meantime, here’s a little look at part of what I’m working on.